About Me (邱盟竣)

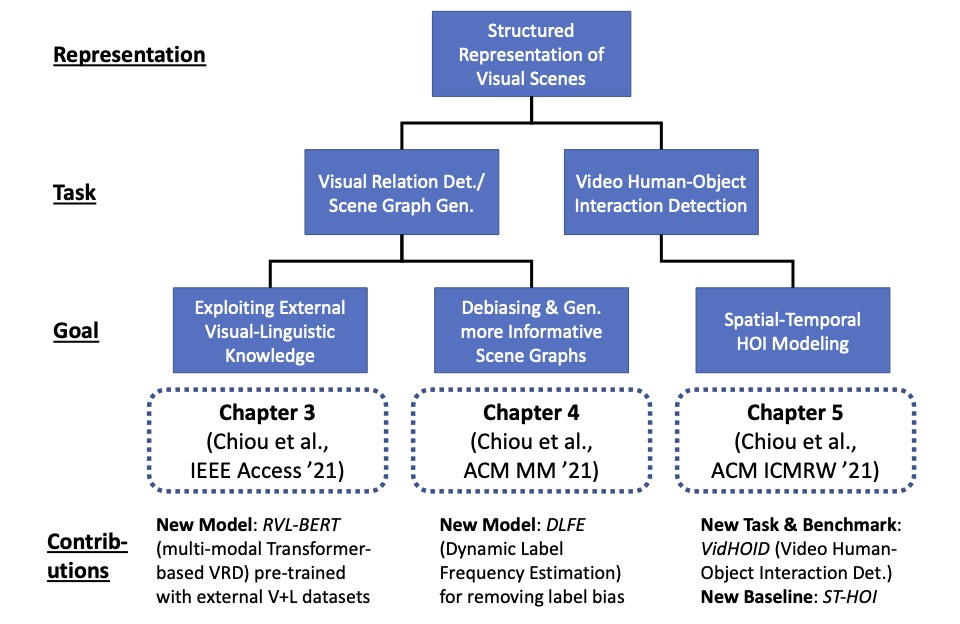

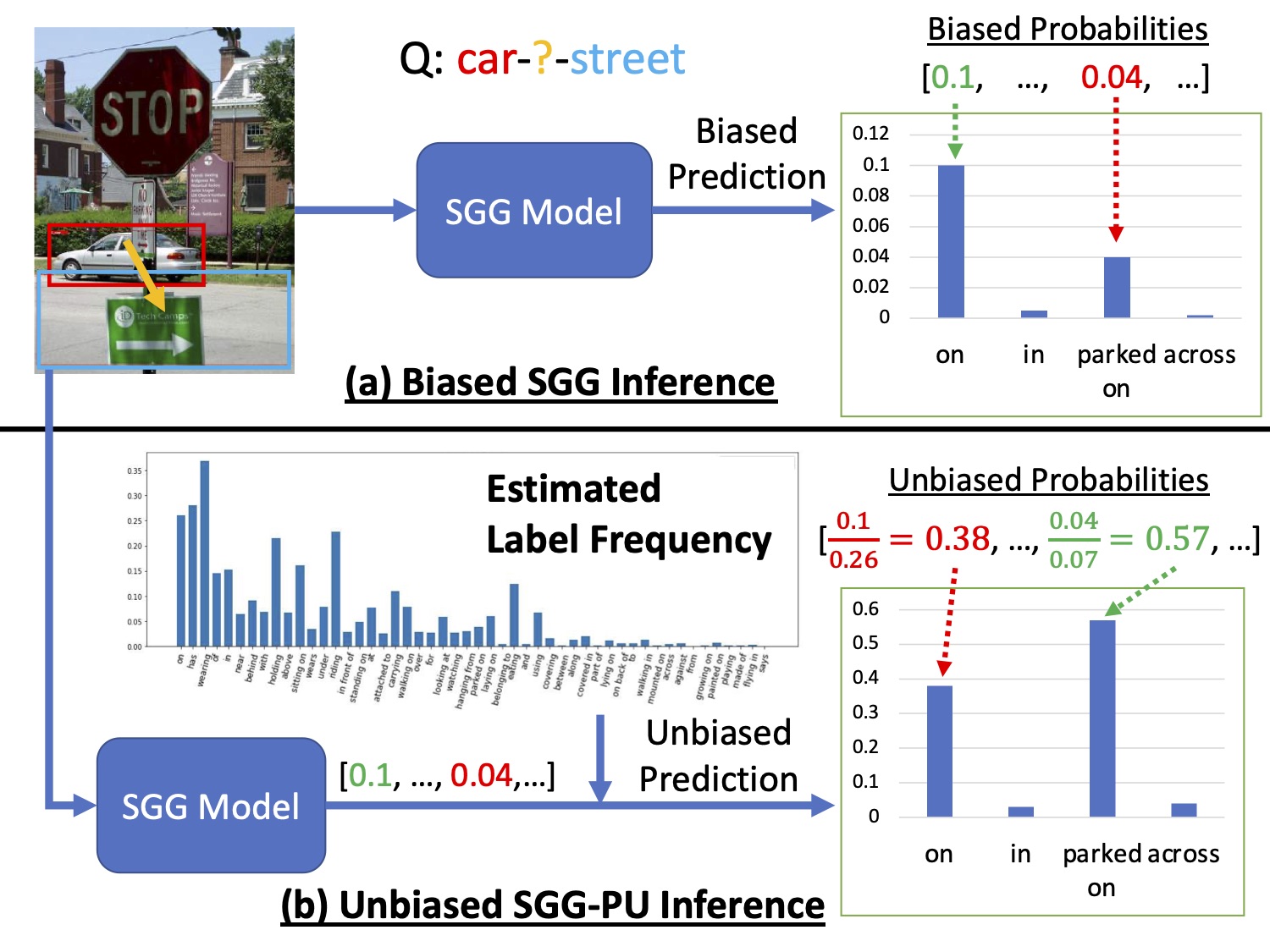

I am an applied scientist with the Smart Home AI team at Amazon. I received my Ph.D. in Computer Science from the National University of Singapore in 2022, supervised by Prof. Roger Zimmermann and Prof. Jiashi Feng. I am interested in computer vision (CV) tasks, including scene graph generation, video understanding, and 3D CV. I am also interested in and work on Large Language Models, Supervised Fine-Tuning, and their applications in CV. I code mostly in Python and PyTorch.

Contact Details

Meng-Jiun Chiou (Applied Scientist)

Sunnyvale, CA

mengjiun.chiou [at] u.nus.edu